The ELK stack provides a very powerful set of tools that allow administrators and developers unfettered access and customizable log aggregation across any manner of server, application or device. Recently I needed to stand up a new ELK stack in a new environment and ran into an “Unable to fetch mapping, Do you have indices matching the pattern?” error. This is how I fixed it.

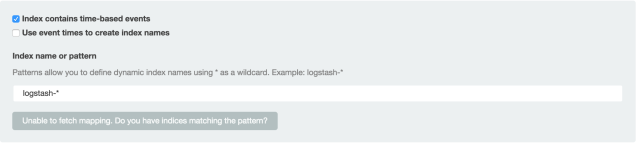

After setting up ELK with the following software versions, I had an issue with indexing in Elasticsearch when using the syslog filter shipped with version 2.1.0 of Logstash.

- Red Hat Enterprise Linux 7.2 VM (8cpu, 32g mem)

- Elasticsearch 2.1.0

- Logstash 2.1.0

- Kibana 4.3.0

- java-1.8.0-openjdk-headless-1.8.0.65-3.b17

The Problem

Kibana wouldn’t let me create an index pattern without an existing index, and there was no index until some logs had been sent to Elasticsearch. I couldn’t send logs through the forwarder either, because it had no index or pattern set up yet.

The Solution

The default Logstash syslog configuration as shipped didn’t work for me, either because it referenced fields that didn’t exist or because I messed something else up. Either way, keeping it simple always prevails.

The solution that worked for me was to manually create an index by running Logstash from the command line, but I had to make some adjustments to the syslog filter first.

Create a simpler syslog filter like the one below. You can back up the existing Logstash-shipped ones elsewhere and use them later, once you have working indices, if you wish.

- file: /etc/logstash/conf.d/10-syslog.conf

    input {
      stdin {
        type => "syslog"
      }
    }

    output {
      stdout { codec => rubydebug }
      elasticsearch {
        hosts => "localhost:9200"
      }
    }

Next, you can use local logs to create your index by piping them into the Logstash CLI. In this example I simply used the local /var/log/messages.

cat /var/log/messages | /opt/logstash/bin/logstash -f /etc/logstash/conf.d/10-syslog.conf

You should see messages letting you know that log entries are successfully created.

    "message" => "Nov 30 13:50:06 bullwinkle systemd: Started The nginx HTTP and reverse proxy server.",
    "@version" => "1",
    "@timestamp" => "2015-11-30T13:50:44.896Z",
    "type" => "syslog",
    "host" => "bullwinkle.example.com"

Refresh your Kibana interface and you should see the “Unable to fetch mapping” error go away.

At this point you should have an existing index and you can proceed with creating an index pattern and adding nodes via logstash-forwarder or normal syslog.
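
If you want to confirm the index really exists before going back to Kibana, you can ask Elasticsearch directly. This is only a rough sketch: it assumes Elasticsearch is listening on localhost:9200 as in the config above, and that Logstash used its default daily index name (logstash-YYYY.MM.dd). The has_logstash_index helper is a name I made up for illustration.

```shell
# has_logstash_index: read `_cat/indices` output on stdin and succeed if any
# index is named like logstash-2015.11.30 (Logstash's default daily pattern).
# The function name is hypothetical, not part of any ELK tool.
has_logstash_index() {
  grep -Eq '(^|[[:space:]])logstash-[0-9]{4}\.[0-9]{2}\.[0-9]{2}([[:space:]]|$)'
}

# Usage (assumes Elasticsearch on localhost:9200):
#   curl -s 'http://localhost:9200/_cat/indices?v' | has_logstash_index \
#     && echo "index found" || echo "no logstash index yet"
```

If you configured a custom index name in the elasticsearch output, the pattern would need adjusting to match it.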

Tuning Heap Size

You probably want to increase the heap size if you’re throwing any serious amount of logs at your ELK instance. On RHEL 7.2 I did the following on a 32G VM, using 16G for both the minimum and maximum heap size.

- General best practice is to never set the heap size to more than half of system memory, so the rest can be used by Lucene.

- You also probably want to set both min and max heap size to the same value so the heap doesn’t resize at runtime.

- file: /usr/share/elasticsearch/bin/elasticsearch.in.sh

    -- snip --
    if [ "x$ES_MIN_MEM" = "x" ]; then
        ES_MIN_MEM=16g    # <--- change here
    fi
    if [ "x$ES_MAX_MEM" = "x" ]; then
        ES_MAX_MEM=16g    # <--- change here
    fi
    if [ "x$ES_HEAP_SIZE" != "x" ]; then
        ES_MIN_MEM=$ES_HEAP_SIZE
        ES_MAX_MEM=$ES_HEAP_SIZE
    fi
    -- snip --
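
If you'd rather not hard-code 16g, the "half of system memory" rule is easy to compute. The sketch below only does the arithmetic; half_ram_mb is a made-up helper name, and it assumes the total is given in kB, which is the unit /proc/meminfo reports. The script above already copies ES_HEAP_SIZE into both the min and max values when that variable is set, so the result could be fed in that way.

```shell
# half_ram_mb: given total RAM in kB (the unit /proc/meminfo uses), print half
# of it in MB. This only encodes the "no more than half" rule from the text.
# The function name is hypothetical.
half_ram_mb() {
  echo $(( $1 / 2 / 1024 ))
}

# Usage on a live system:
#   total_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
#   echo "ES_HEAP_SIZE=$(half_ram_mb "$total_kb")m"
```

Note that MemTotal is usually a bit less than the nominal VM size, so the result on a 32G VM will come out slightly under 16384.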

Tuning Swap Behavior

You may also want to either disable swap entirely or set vm.swappiness to 1 in sysctl, which makes the system swap only in emergency situations.

echo "vm.swappiness = 1" >> /etc/sysctl.conf

sysctl -p

Tuning Memory Mapping Behavior

Elasticsearch uses a hybrid mmapfs/niofs directory type by default to store indices, and on some Linux systems the limit on memory-mapped areas is set too low for this. You can raise it with the vm.max_map_count setting in sysctl.

echo "vm.max_map_count = 262144" >> /etc/sysctl.conf

sysctl -p
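
One catch with echo >> is that running it a second time leaves duplicate lines in /etc/sysctl.conf. Here is a rough sketch of an idempotent alternative. set_kv is a helper name I made up, and it assumes the file only uses simple "key = value" lines.

```shell
# set_kv FILE KEY VALUE: make sure FILE ends up with exactly one
# "KEY = VALUE" line. Hypothetical helper, not part of sysctl.
set_kv() {
  kv_file=$1; kv_key=$2; kv_value=$3
  # Escape dots so "vm.swappiness" is matched literally by grep -E.
  kv_pattern=$(printf '%s' "$kv_key" | sed 's/\./\\./g')
  kv_tmp=$(mktemp) || return 1
  touch "$kv_file"
  # Keep every line except existing assignments of the key...
  grep -Ev "^[[:space:]]*${kv_pattern}[[:space:]]*=" "$kv_file" > "$kv_tmp" || true
  # ...then append the single assignment we want.
  printf '%s = %s\n' "$kv_key" "$kv_value" >> "$kv_tmp"
  # Overwrite in place so the file keeps its ownership and permissions.
  cat "$kv_tmp" > "$kv_file"
  rm -f "$kv_tmp"
}

# Usage (as root):
#   set_kv /etc/sysctl.conf vm.swappiness 1
#   set_kv /etc/sysctl.conf vm.max_map_count 262144
#   sysctl -p
```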

Increasing System Limits

Elasticsearch can get hungry for memory and open files, so it’s usually recommended to dedicate one machine or beefy VM to it. You might want to give the elasticsearch user free rein over system resources as well.

    cat >> /etc/security/limits.conf << EOF
    elasticsearch soft memlock unlimited
    elasticsearch hard memlock unlimited
    elasticsearch - nofile 65535
    EOF
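
Each entry in limits.conf takes four fields (domain, type, item, value), and a line with one missing is easy to overlook. A quick sanity check could look like the sketch below. check_limits_fields is a hypothetical helper name, and it assumes no trailing comments on entry lines.

```shell
# check_limits_fields: read limits.conf-style text on stdin and fail if any
# non-blank, non-comment line does not have exactly four fields
# (domain, type, item, value). Hypothetical helper name.
check_limits_fields() {
  awk '
    BEGIN { bad = 0 }
    /^[ \t]*(#|$)/ { next }    # skip comments and blank lines
    NF != 4 { printf "line %d: expected 4 fields, got %d\n", NR, NF; bad = 1 }
    END { exit bad }
  '
}

# Usage:
#   check_limits_fields < /etc/security/limits.conf && echo "looks ok"
```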

Tuning Index Cache Size

You may also elect to limit the fielddata cache size. By default there’s no limit (so data is never evicted), which might be nice for smaller environments. On larger environments, even with a single index, once you hit the fielddata circuit breaker (indices.breaker.fielddata.limit, the point at which a query that would load more fielddata is aborted rather than risk an out-of-memory error; the default is 60% of the heap size) you’ll no longer be able to load any more fielddata. Remember that indices.fielddata.cache.size needs to be set lower than the indices.breaker.fielddata.limit circuit breaker setting, or no data will ever be evicted.

Below I’m setting my fielddata cache size to 55% of the heap size, so for a 16G heap that is approximately 8.8G.

echo "indices.fielddata.cache.size: 55%" >> /etc/elasticsearch/elasticsearch.yml

systemctl restart elasticsearch.service
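
To double-check the numbers for a different heap size, the arithmetic can be sketched in a couple of lines of shell. Both function names are made up for illustration, and the percentages are the ones from this post (55% cache, 60% default breaker limit).

```shell
# pct_of_heap_mb HEAP_MB PCT: integer MB that PCT percent of the heap comes to.
# Hypothetical helper name.
pct_of_heap_mb() {
  echo $(( $1 * $2 / 100 ))
}

# cache_below_breaker CACHE_PCT BREAKER_PCT: succeed only if the cache size is
# strictly below the breaker limit, so eviction can happen before the breaker
# trips. Hypothetical helper name.
cache_below_breaker() {
  [ "$1" -lt "$2" ]
}

# Usage for a 16G (16384 MB) heap:
#   pct_of_heap_mb 16384 55        # cache size in MB, roughly 8.8G
#   cache_below_breaker 55 60 && echo "cache limit is below the breaker"
```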

Logstash: Disable stdout Logging

One more thing you may want to do is turn off stdout logging in Logstash. It can create a gigantic logstash.stdout file, because by default it logs every single item sent to it. For me it’s the file below; remove the offending line.

- file: /etc/logstash/conf.d/30-lumberjack-output.conf

    output {
      elasticsearch { hosts => ["localhost:9200"] }
      stdout { codec => rubydebug }    # <---- remove the entire stdout line
    }

systemctl restart logstash
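
If you have several config files to clean up, the edit can be scripted. The sketch below is a minimal take that only handles a stdout output written on a single line, like the one above; a stdout block spread over multiple lines is not touched. strip_stdout_output is a name I made up.

```shell
# strip_stdout_output: print a Logstash config from stdin with single-line
# `stdout { ... }` outputs removed. Multi-line stdout blocks are NOT handled.
# Hypothetical helper name.
strip_stdout_output() {
  sed '/^[[:space:]]*stdout[[:space:]]*{.*}[[:space:]]*$/d'
}

# Usage: write to a scratch file first and review it before replacing anything.
#   strip_stdout_output < /etc/logstash/conf.d/30-lumberjack-output.conf \
#     > /tmp/30-lumberjack-output.conf
```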

More

As I do more with the ELK stack I’ll probably add to this post or write others about the subject.

The ELK stack provides an awesome set of tools that should be in anyone’s arsenal if you’re managing more than even a few servers, applications and devices.

There’s a lot to it, and this simple post just solves a problem I ran into – I hope it helps someone else who comes across it.

Hey, to set the memory just set the ES_HEAP_SIZE env var. Also use elasticsearch 2.x where you no longer need fielddata that much so you can actually skip that step too here.

Thanks man! This helped me a lot this year, as i needed to “forcefully” upload some files to my ELK node.
